dbinom(x=3,size=10,prob=0.1)

#> [1] 0.05739563

What is Bayesian inference?

A reminder on conditional probabilities

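The ordering matters: $\Pr(A \mid B) = \Pr(A \cap B)/\Pr(B)$ is in general not equal to $\Pr(B \mid A) = \Pr(A \cap B)/\Pr(A)$.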

Screening for vampirism

The chance of the test being positive given you are a vampire is

The chance of a negative test given you are mortal is

What is the question?

From the perspective of the test: Given a person is a vampire, what is the probability that the test is positive?

From the perspective of a person: Given that the test is positive, what is the probability that this person is a vampire?

Assume that vampires are rare, and represent only

What is the answer? Bayes’ theorem to the rescue!

Let’s work through an example.

Screening for vampirism

Suppose the diagnostic test has the same sensitivity and specificity but vampirism is more common:

What is the probability that a person is a vampire, given that the test is positive?

The probability that a person is a vampire, given that the test is positive

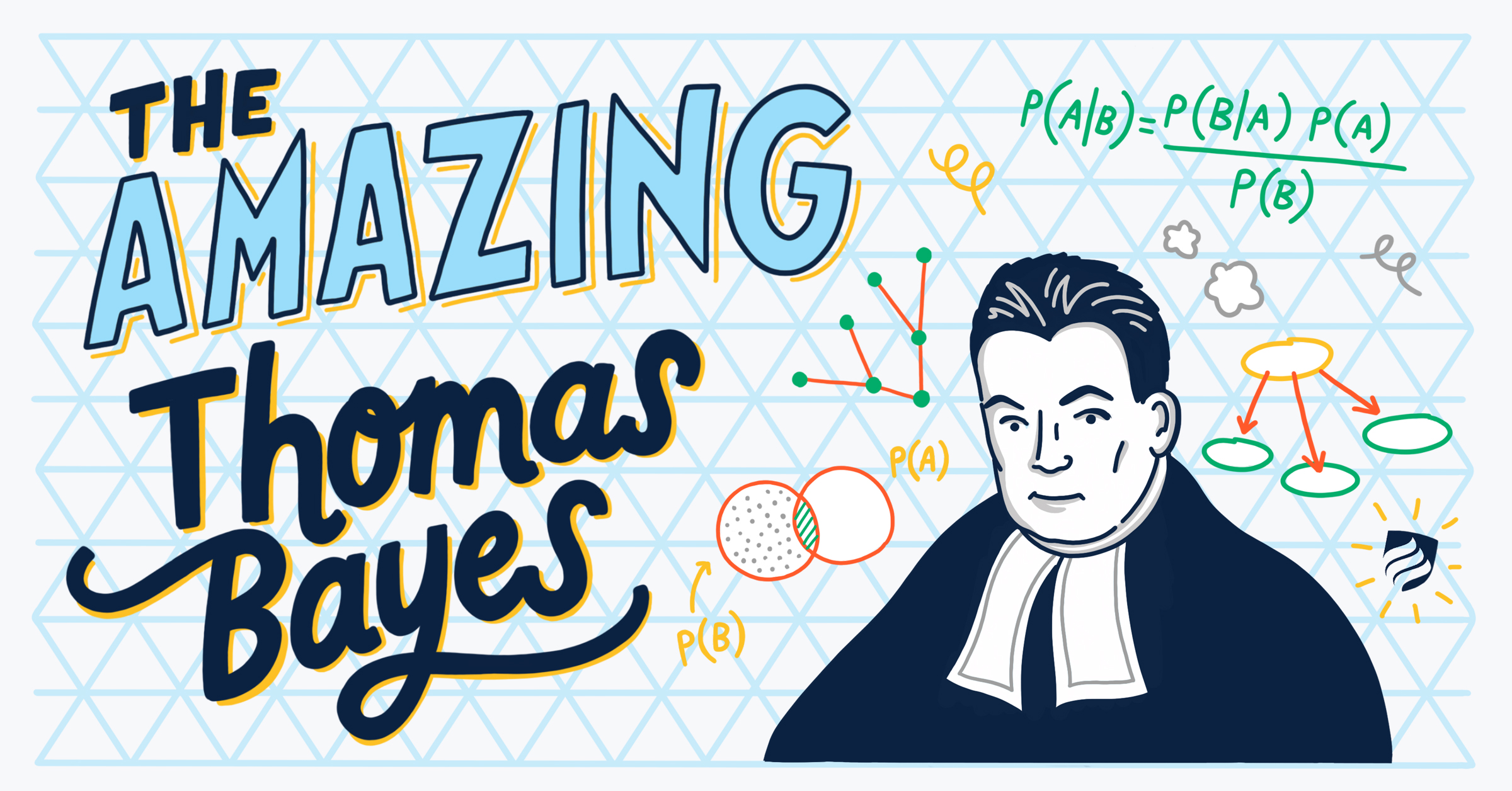
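The calculation behind both screening slides can be sketched in R. The function name `posterior_vampire` and the sensitivity, specificity and prevalence values below are hypothetical placeholders, not the figures used in the slides; the point is only to show how the posterior moves when the prevalence alone changes.

```r
# Bayes' theorem for a screening test.
# All numerical values are HYPOTHETICAL placeholders.
posterior_vampire <- function(sensitivity, specificity, prevalence) {
  # Pr(+) = Pr(+ | vampire) Pr(vampire) + Pr(+ | mortal) Pr(mortal)
  p_positive <- sensitivity * prevalence + (1 - specificity) * (1 - prevalence)
  # Pr(vampire | +) = Pr(+ | vampire) Pr(vampire) / Pr(+)
  sensitivity * prevalence / p_positive
}

posterior_vampire(sensitivity = 0.95, specificity = 0.99, prevalence = 0.001)  # rare
posterior_vampire(sensitivity = 0.95, specificity = 0.99, prevalence = 0.1)    # more common
```

With these placeholder numbers, the same test gives a posterior below 10% when vampires are very rare and above 90% when they are common.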

Bayes’ theorem

A theorem about conditional probabilities:

$$\Pr(A \mid B) = \frac{\Pr(B \mid A)\,\Pr(A)}{\Pr(B)}$$

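- Easy to mess up with letters. It might be easier to remember when written like this:

$$\Pr(\text{hypothesis} \mid \text{data}) = \frac{\Pr(\text{data} \mid \text{hypothesis})\,\Pr(\text{hypothesis})}{\Pr(\text{data})}$$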

The “hypothesis” is typically something unobserved or unknown. It’s what you want to learn about using the data.

For regression models, the “hypothesis” is a parameter (intercept, slopes or error terms).

Bayes’ theorem tells you the probability of the hypothesis given the data.

Frequentist versus Bayesian

Typical statistical problems involve estimating a parameter (or several parameters) from the available data.

The frequentist approach (maximum likelihood estimation – MLE) assumes that the parameters are fixed, but have unknown values to be estimated.

Classical estimates generally provide a point estimate of the parameter of interest.

The Bayesian approach does not treat the parameters as fixed: it describes them with a probability distribution, which expresses our uncertainty about their values.

What is the Bayesian approach?

The approach is based upon the idea that the experimenter begins with some prior beliefs about the system.

And then updates these beliefs on the basis of observed data.

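This updating procedure is based on Bayes’ theorem. Schematically, if $A$ stands for the parameters and $B$ for the observed data, then Bayes’ theorem

$$\Pr(A \mid B) = \frac{\Pr(B \mid A)\,\Pr(A)}{\Pr(B)}$$

translates into:

$$\Pr(\text{parameters} \mid \text{data}) = \frac{\Pr(\text{data} \mid \text{parameters})\,\Pr(\text{parameters})}{\Pr(\text{data})}$$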

Bayes’ theorem
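Bayes’ theorem can be applied directly when there are only a few candidate hypotheses. The sketch below borrows the breeding data introduced in the next section (3 births in 10 attempts); the three candidate probabilities and the equal prior weights are illustrative choices, not part of the original material.

```r
# Bayes' theorem over a small, discrete set of hypotheses.
# Data: 3 successful breeding attempts out of 10.
# Candidate values and equal prior weights are ILLUSTRATIVE choices.
hypotheses <- c(0.1, 0.25, 0.9)                            # candidate Pr(birth)
prior      <- rep(1 / 3, 3)                                # equal prior belief
likelihood <- dbinom(x = 3, size = 10, prob = hypotheses)  # Pr(data | hypothesis)
evidence   <- sum(likelihood * prior)                      # Pr(data), normalizing constant
posterior  <- likelihood * prior / evidence                # Pr(hypothesis | data)
round(posterior, 3)
```

The posterior sums to 1 by construction, and most of its mass goes to the hypothesis under which the observed data are most probable.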

Likelihood

Usually, when talking about probability distributions, we assume that we know the parameter values.

In the real world, it is usually the other way around.

For example, a question of interest might be:

We have observed 3 births by a female during her 10 breeding attempts. What does this tell us about the true probability of getting a successful breeding attempt from this female? For the population?

We don’t know what the probability of a birth is.

But we can calculate the probability of getting our data for different values:


dbinom(x=3,size=10,prob=0.9)

#> [1] 8.748e-06

dbinom(x=3,size=10,prob=0.25)

#> [1] 0.2502823

So we would be more likely to observe 3 births if the probability of a birth were 0.25 than if it were 0.1 or 0.9.

The likelihood

This reasoning is so common in statistics that it has a special name:

The likelihood is the probability of observing the data under a certain model.

Since the data are known, we usually consider the likelihood as a function of the model parameters.
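Treating the likelihood as a function of the parameter is straightforward in R, because `dbinom()` is vectorized over `prob`. A minimal sketch for the breeding data, using an evenly spaced grid of candidate values (the spacing of 0.01 is an arbitrary choice):

```r
# Likelihood of the breeding data (3 births in 10 attempts)
# viewed as a function of the unknown probability of a birth.
p_grid <- seq(from = 0, to = 1, by = 0.01)          # candidate values
lik    <- dbinom(x = 3, size = 10, prob = p_grid)   # Pr(data | p) for each value
plot(p_grid, lik, type = "l",
     xlab = "probability of a birth", ylab = "likelihood")
p_grid[which.max(lik)]   # grid value with the highest likelihood
```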

A Formula for the Likelihood Function

Let

Likelihood functions in R

We may create a function to calculate a likelihood:
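A minimal sketch of such a function for the breeding data; the name `binom_lik` and its default arguments are illustrative, not taken from the original slides.

```r
# Binomial likelihood as a function of p.
# `binom_lik` and its defaults (3 successes, 10 attempts) are illustrative.
binom_lik <- function(p, successes = 3, attempts = 10) {
  dbinom(x = successes, size = attempts, prob = p)
}

binom_lik(0.25)                # likelihood of p = 0.25
binom_lik(c(0.1, 0.25, 0.9))   # works on a vector of candidate values
```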

Maximize the likelihood (3 successes out of 10 attempts)

The maximum of the likelihood is at the value 0.3, that is, the observed proportion 3/10.
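One way to locate this maximum numerically, for a single parameter on [0, 1], is base R's `optimize()` with `maximum = TRUE`. A sketch:

```r
# Numerical maximization of the binomial likelihood (3 successes, 10 attempts).
binom_lik <- function(p) dbinom(x = 3, size = 10, prob = p)
fit <- optimize(binom_lik, interval = c(0, 1), maximum = TRUE)
fit$maximum     # value of p at the maximum (close to 3/10)
fit$objective   # likelihood at that value
```

`optimize()` stops at a default tolerance of roughly 1e-4, so the result agrees with 0.3 only up to that precision.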

Maximum likelihood estimation

There is usually a set of parameter values that gives the highest likelihood of observing the data; this is the maximum likelihood estimate (MLE).


These can be calculated using:

- Trial and error (not efficient!).

- Compute the maximum of a function by hand (rarely doable in practice).

- An iterative optimization algorithm:

?optim in R.

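By hand:

compute the MLE of the success probability $p$ from $n$ trials with $x$ successes.

We are searching for the maximum of $L(p) = \binom{n}{x} p^{x}(1-p)^{n-x}$, or equivalently of $\log L(p) = \log\binom{n}{x} + x\log p + (n-x)\log(1-p)$.

Compute the derivative with respect to $p$: $\frac{d \log L(p)}{dp} = \frac{x}{p} - \frac{n-x}{1-p}$.

Then solve $\frac{x}{p} - \frac{n-x}{1-p} = 0$, which gives $\hat{p} = x/n$.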

Here, the MLE is the proportion of observed successes.

Using a computer:

MLE of the success probability $p$ from $n$ trials with $x$ successes.

Use optim when the number of parameters is
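A sketch of the computer-based approach with `optim()`, minimizing the negative log-likelihood for the running example (3 successes in 10 trials). Working on the log scale is a common choice for numerical stability; the function name `negloglik` is illustrative. With only one parameter, the sketch uses optim's `"Brent"` method, which requires finite `lower` and `upper` bounds.

```r
# MLE of p with optim(), by minimizing the negative log-likelihood.
# Data as in the running example: x = 3 successes in n = 10 trials.
negloglik <- function(p, x = 3, n = 10) {
  -dbinom(x = x, size = n, prob = p, log = TRUE)
}
# For a single parameter, optim() offers method = "Brent" with finite bounds
# (its default Nelder-Mead method warns in one dimension).
fit <- optim(par = 0.5, fn = negloglik, method = "Brent", lower = 0, upper = 1)
fit$par      # numerical MLE
3 / 10       # analytical MLE: the proportion of successes
```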

The Bayesian approach

The Bayesian starts off with a prior.

Now, the one thing we know about the success probability $p$ is that it lies between 0 and 1.

Thus, a suitable prior distribution might be the Beta distribution, defined on $[0, 1]$.

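What is the Beta distribution?

$$f(p \mid \alpha, \beta) = \frac{p^{\alpha-1}(1-p)^{\beta-1}}{B(\alpha, \beta)}, \quad 0 < p < 1,$$

with $\alpha > 0$, $\beta > 0$ and $B(\alpha, \beta) = \frac{\Gamma(\alpha)\,\Gamma(\beta)}{\Gamma(\alpha+\beta)}$.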

Beta-Binomial Conjugate Prior

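- We assume a priori that $p \sim \text{Beta}(\alpha, \beta)$.

- We also assume that the data follow $x \mid p \sim \text{Binomial}(n, p)$.

- Then we have: $\Pr(p \mid x) \propto p^{x}(1-p)^{n-x} \times p^{\alpha-1}(1-p)^{\beta-1} = p^{\alpha+x-1}(1-p)^{\beta+n-x-1}$.

- That is: $p \mid x \sim \text{Beta}(\alpha + x, \beta + n - x)$.

- Take a Beta prior with a Binomial likelihood, and you get a Beta posterior (conjugacy).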
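The conjugate update only requires adding counts to the prior parameters. A minimal sketch; the function name `beta_binom_update` and the uniform Beta(1, 1) prior are illustrative assumptions.

```r
# Beta-Binomial conjugate update: a Beta(alpha, beta) prior combined with
# x successes in n trials gives a Beta(alpha + x, beta + n - x) posterior.
beta_binom_update <- function(alpha, beta, x, n) {
  c(alpha = alpha + x, beta = beta + n - x)
}

# Illustrative uniform prior, data: 3 successes in 10 trials
post <- beta_binom_update(alpha = 1, beta = 1, x = 3, n = 10)
post                                                   # Beta(4, 8)
post[["alpha"]] / (post[["alpha"]] + post[["beta"]])   # posterior mean
```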

Often, one has a belief about the distribution of one’s data. You may think that your data come from a binomial distribution, and in that case you typically know the number of trials $n$ but not the probability of success $p$.

Bayesians express their belief in terms of personal probabilities. These personal probabilities encapsulate everything a Bayesian knows or believes about the problem. But these beliefs must obey the laws of probability, and be consistent with everything else the Bayesian knows.

So a Bayesian will seek to express their belief about the value of $p$ through a probability distribution.

Bayesians express their uncertainty through probability distributions.

One can think about the situation and self-elicit a probability distribution that approximately reflects his/her personal probability.

One’s personal probability should change according to Bayes’ rule as new data are observed.

The beta family of distributions can describe a wide range of prior beliefs.
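To see this range of shapes, one can plot a few Beta densities in R. The parameter pairs below are arbitrary illustrative choices; the grid stops short of 0 and 1 because some Beta densities (such as Beta(0.5, 0.5)) are infinite at the endpoints.

```r
# A few Beta(a, b) priors (illustrative parameter choices) and their means.
shapes <- list(c(1, 1), c(2, 2), c(2, 5), c(5, 2), c(0.5, 0.5))
p <- seq(0.005, 0.995, by = 0.005)   # interior grid avoids infinite densities
densities <- sapply(shapes, function(s) dbeta(p, shape1 = s[1], shape2 = s[2]))
matplot(p, densities, type = "l", lty = 1,
        xlab = "p", ylab = "prior density")
# The mean of a Beta(a, b) distribution is a / (a + b)
prior_means <- sapply(shapes, function(s) s[1] / (s[1] + s[2]))
prior_means
```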

Conjugacy

Next, let’s introduce the concept of conjugacy in Bayesian statistics.

Suppose we have the prior beliefs about the data as below:

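Binomial distribution $\text{Bin}(n, p)$, with $n$ known and $p$ unknown.

Prior belief about $p$: $p \sim \text{Beta}(\alpha, \beta)$.

Then we observe $x$ successes in $n$ trials. Bayes’ rule implies that our new belief about $p$ is again a Beta distribution, with updated parameters: $p \mid x \sim \text{Beta}(\alpha + x, \beta + n - x)$.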

This is an example of conjugacy. Conjugacy occurs when the posterior distribution is in the same family of probability density functions as the prior belief, but with new parameter values, which have been updated to reflect what we have learned from the data.

Why are the beta and binomial families conjugate? Here is a mathematical explanation.

Recall the discrete form of Bayes’ rule:

$$\Pr(A_i \mid B) = \frac{\Pr(B \mid A_i)\,\Pr(A_i)}{\sum_{j} \Pr(B \mid A_j)\,\Pr(A_j)}$$

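However, this formula does not apply to continuous random variables, such as $p$, which follows a Beta distribution.

But the good news is that the continuous version of Bayes’ rule has the same form, with an integral in place of the sum:

$$\pi^*(p \mid x) = \frac{\Pr(x \mid p)\,\pi(p)}{\int_0^1 \Pr(x \mid p)\,\pi(p)\,dp}$$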

This is analogous to the discrete form, since the integral in the denominator is also a constant that does not depend on $p$, just like the summation. This constant ensures that the total area under the posterior density curve equals 1.

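Note that in the numerator, the first term, $\Pr(x \mid p)$, is the likelihood, and the second term, $\pi(p)$, is the prior.

In the beta-binomial case, we have $\Pr(x \mid p) = \binom{n}{x} p^{x}(1-p)^{n-x}$, the $\text{Bin}(n, p)$ probability, and $\pi(p) = \frac{p^{\alpha-1}(1-p)^{\beta-1}}{B(\alpha, \beta)}$, the $\text{Beta}(\alpha, \beta)$ density.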

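Plugging in these distributions, we get

$$\pi^*(p \mid x) = \frac{\binom{n}{x} p^{x}(1-p)^{n-x}\,\frac{p^{\alpha-1}(1-p)^{\beta-1}}{B(\alpha,\beta)}}{\int_0^1 \binom{n}{x} p^{x}(1-p)^{n-x}\,\frac{p^{\alpha-1}(1-p)^{\beta-1}}{B(\alpha,\beta)}\,dp} = \frac{p^{\alpha+x-1}(1-p)^{\beta+n-x-1}}{\int_0^1 p^{\alpha+x-1}(1-p)^{\beta+n-x-1}\,dp}$$

Let $\alpha^* = \alpha + x$ and $\beta^* = \beta + n - x$. Then $\pi^*(p \mid x)$ is the $\text{Beta}(\alpha^*, \beta^*)$ density, the same posterior as in the conjugate update rule.

We can recognize the posterior distribution from the numerator $p^{\alpha+x-1}(1-p)^{\beta+n-x-1}$: everything else is a constant, and it must take the unique value that makes the area under the curve between 0 and 1 equal to 1, namely $1/B(\alpha^*, \beta^*)$.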

This is a cute trick. We can find the answer without doing the integral simply by looking at the form of the numerator.
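The trick can also be checked numerically: normalizing likelihood × prior with `integrate()` should reproduce the Beta(α + x, β + n − x) density. A sketch with an illustrative Beta(2, 2) prior and the running data (3 successes in 10 trials):

```r
# Numerical check of beta-binomial conjugacy.
a <- 2; b <- 2     # illustrative Beta(a, b) prior
x <- 3; n <- 10    # data: 3 successes in 10 trials
unnormalized <- function(p) dbinom(x, size = n, prob = p) * dbeta(p, a, b)
evidence <- integrate(unnormalized, lower = 0, upper = 1)$value  # denominator
p <- c(0.1, 0.3, 0.5, 0.7)
numerical <- unnormalized(p) / evidence      # posterior via Bayes' rule
conjugate <- dbeta(p, a + x, b + n - x)      # Beta(a + x, b + n - x) density
all.equal(numerical, conjugate, tolerance = 1e-6)
```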

Without conjugacy, one has to do the integral. Often, the integral is impossible to evaluate. That obstacle is the primary reason that most statistical theory in the 20th century was not Bayesian. The situation did not change until modern computing allowed researchers to compute integrals numerically.

Three Conjugate Families

This section covers three conjugate families: the beta-binomial, gamma-Poisson, and normal-normal pairs. Each has its own range of practical applications.

The Gamma-Poisson Conjugate Families

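The Poisson distribution has a single parameter $\lambda > 0$ and is denoted $\text{Pois}(\lambda)$. Its probability mass function is

$$\Pr(X = k) = \frac{\lambda^{k} e^{-\lambda}}{k!}, \quad k = 0, 1, 2, \ldots$$

where $k! = k \times (k-1) \times \cdots \times 1$ (with $0! = 1$).

Note that $\lambda$ is both the mean and the variance of the Poisson distribution.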

The gamma family is flexible, and the figure below illustrates a wide range of gamma shapes.

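The probability density function for the gamma is indexed by shape $k$ and scale $\theta$, denoted $\text{Gamma}(k, \theta)$ with $k, \theta > 0$:

$$f(x \mid k, \theta) = \frac{1}{\Gamma(k)\,\theta^{k}}\, x^{k-1} e^{-x/\theta}, \quad x > 0,$$

where $\Gamma(\cdot)$ is the gamma function. Its mean is $k\theta$ and its variance is $k\theta^2$.

However, some books parameterize the gamma distribution in a slightly different way, with shape $\alpha = k$ and rate (inverse scale) $\beta = 1/\theta$:

$$f(x \mid \alpha, \beta) = \frac{\beta^{\alpha}}{\Gamma(\alpha)}\, x^{\alpha-1} e^{-\beta x}, \quad x > 0.$$

For this example, we use the shape-scale $(k, \theta)$ parameterization, but it is always worth checking which parameterization a book or software uses.

In the later material we find that using the rate parameterization is more convenient.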
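In R, `dgamma()` accepts either a `rate` or a `scale` argument, so both parameterizations are available. The sketch below checks that they agree and that the mean and variance are kθ and kθ² (by simulation); the values k = 3 and θ = 2 are arbitrary.

```r
# Shape-scale versus shape-rate parameterizations of the gamma in R.
k <- 3; theta <- 2                 # arbitrary illustrative values
x <- c(0.5, 2, 6)
d_scale <- dgamma(x, shape = k, scale = theta)
d_rate  <- dgamma(x, shape = k, rate = 1 / theta)
all.equal(d_scale, d_rate)         # same density, different arguments
# Mean k * theta and variance k * theta^2, checked by simulation
set.seed(1)
draws <- rgamma(1e5, shape = k, scale = theta)
c(mean = mean(draws), var = var(draws))
```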

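For the gamma-Poisson conjugate family, suppose we observed data $x_1, \ldots, x_n$ that are independent and identically distributed $\text{Pois}(\lambda)$. If the prior is $\lambda \sim \text{Gamma}(k, \theta)$, then the posterior is

$$\lambda \mid x_1, \ldots, x_n \sim \text{Gamma}\left(k + \sum_{i=1}^{n} x_i,\; \frac{\theta}{n\theta + 1}\right).$$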
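A minimal sketch of the gamma-Poisson update in the shape-scale parameterization: a Gamma(k, θ) prior and n independent Poisson counts give a Gamma(k + sum of the counts, θ/(nθ + 1)) posterior. The function name `gamma_pois_update`, the prior values and the counts are hypothetical.

```r
# Gamma-Poisson conjugate update (shape k, scale theta).
# Prior values and counts are HYPOTHETICAL, for illustration only.
gamma_pois_update <- function(k, theta, counts) {
  n <- length(counts)
  c(shape = k + sum(counts), scale = theta / (n * theta + 1))
}

counts <- c(2, 4, 3, 1, 5)
post <- gamma_pois_update(k = 2, theta = 1, counts = counts)
post
post[["shape"]] * post[["scale"]]   # posterior mean of lambda
```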

The Normal-Normal Conjugate Families

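There are other conjugate families, and one is the normal-normal pair. If your data come from a normal distribution with known variance $\sigma^2$ but unknown mean $\mu$, and your prior on $\mu$ is normal, then your posterior for $\mu$ is also normal.

As a practical matter, one often does not know $\sigma^2$, so the known-variance assumption is a limitation.

For the normal-normal conjugate families, assume the prior on the unknown mean follows a normal distribution, i.e. $\mu \sim N(\nu, \tau^2)$. We also assume that the data $x_1, \ldots, x_n$ are independent and identically distributed $N(\mu, \sigma^2)$, with $\sigma^2$ known.

Then the posterior distribution of $\mu$ is also normal:

$$\mu \mid x_1, \ldots, x_n \sim N(\nu^*, \tau^{*2}),$$

where $\bar{x}$ is the sample mean and

$$\nu^* = \frac{\nu\sigma^2 + n\bar{x}\tau^2}{\sigma^2 + n\tau^2}, \qquad \tau^{*2} = \frac{\sigma^2\tau^2}{\sigma^2 + n\tau^2}.$$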

Numerical Example

Suppose we have a sample of size
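Since the sample details are not given here, the sketch below uses made-up values (prior N(0, 1), known σ² = 4, n = 10, sample mean 1.5) purely to show how the normal-normal posterior mean and variance are computed. The function name `normal_update` is illustrative.

```r
# Normal-normal conjugate update for a mean with known data variance sigma2.
# Prior: mu ~ N(nu, tau2). All numbers below are HYPOTHETICAL.
normal_update <- function(nu, tau2, sigma2, n, xbar) {
  post_mean <- (nu * sigma2 + n * xbar * tau2) / (sigma2 + n * tau2)
  post_var  <- (sigma2 * tau2) / (sigma2 + n * tau2)
  c(mean = post_mean, var = post_var)
}

res <- normal_update(nu = 0, tau2 = 1, sigma2 = 4, n = 10, xbar = 1.5)
res
```

Here the posterior mean, 15/14 ≈ 1.07, lies between the prior mean 0 and the sample mean 1.5, and the posterior variance, 4/14 ≈ 0.29, is smaller than both the prior variance 1 and the variance of the sample mean σ²/n = 0.4.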

Credible and Confidence Intervals

Credible intervals are the Bayesian alternative to confidence intervals. Confidence intervals are the frequentist way to express uncertainty about an estimate of a population mean, a population proportion or some other parameter.

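A confidence interval has the form of a lower and an upper bound:

$$L, U = \text{pe} \pm \text{se} \times \text{cv},$$

where pe is the point estimate, se is the standard error, and cv is the critical value (for example, about 1.96 for a 95% interval based on the normal distribution).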

Most importantly, the interpretation of a 95% confidence interval on the mean is that “95% of similarly constructed intervals will contain the true mean”, not “the probability that the true mean lies between the lower and upper bounds is 0.95”.

The reason for this frequentist wording is that a frequentist may not express his uncertainty as a probability. The true mean is either within the interval or not, so the probability is zero or one. The problem is that the frequentist does not know which is the case.

On the other hand, Bayesians have no such qualms. It is fine for us to say that “the probability that the true mean is contained within a given interval is 0.95”. To distinguish our intervals from confidence intervals, we call them credible intervals.
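For the breeding example, a credible interval can be read straight off the posterior with `qbeta()`. The sketch assumes a uniform Beta(1, 1) prior, so the posterior after 3 successes in 10 trials is Beta(4, 8), and sets it next to a normal-approximation (Wald) 95% confidence interval; both choices are illustrative.

```r
# 95% equal-tailed credible interval from a Beta(1 + x, 1 + n - x) posterior
# (uniform prior assumed) next to a Wald 95% confidence interval.
x <- 3; n <- 10
credible <- qbeta(c(0.025, 0.975), shape1 = 1 + x, shape2 = 1 + n - x)
p_hat <- x / n                                   # point estimate
se <- sqrt(p_hat * (1 - p_hat) / n)              # standard error
confidence <- p_hat + c(-1, 1) * qnorm(0.975) * se
rbind(credible = credible, confidence = confidence)
```

The two intervals differ numerically, but the main difference is in interpretation: the credible interval is a probability statement about p given the data and the prior, while the confidence interval describes the long-run behaviour of the procedure.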

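A confidence interval of X% for a parameter is an interval generated by a procedure that, in repeated sampling, has an X% probability of containing the true value of the parameter, for all possible values of the parameter.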

Say you performed a statistical analysis to compare the efficacy of a public policy between two groups, and you obtained a difference between the means of these groups. You can express this difference as a confidence interval. Often we choose 95% confidence. This means that